In our last security guide, we covered WordPress security in depth. Today, we’re going to show you how to harden your server against attacks.

Hardening your WordPress installation is a vital first step, so if you haven’t read through the first article, go and read it now.

That said, there are plenty of ways for a hacker to damage your website without even touching WordPress. If your server is vulnerable, they can alter or delete any of the content on your site, steal your customer data, or use your machine for their own criminal games.

Standard Linux servers have pretty weak security out of the box, so you have to take matters into your own hands. Alternatively, you can migrate to a specialist WordPress hosting company – they take care of the security for you so you can focus on your content.

If you find your head spinning as you read this guide, that may be the best choice for you.

That said, managing your own web security is totally doable.

Table Of Contents

- 1. Hardening Your Server

- 2. Tools of the Trade

- 3. How Hacks Happen

- 4. Security – An overview

- 4.1. Know Your System

- 4.2. Principle of Least Privilege

- 4.3. Defense In Depth

- 4.4. Protection is Key but Detection is a Must

- 4.5. Know Your Enemy

- 4.6. Proactive Vs Reactive

- 5. It’s Not Just WordPress That Gets Hacked

- 6. Access Control

- 6.1. Discretionary Access Control

- 6.2. Permissions

- 6.3. Owner Permissions

- 6.4. Public Permissions

- 6.5. Group Permissions

- 6.6. Putting it Together

- 6.7. Directories Are Weird

- 6.8. Elevated Privileges

- 6.9. A New Group Appears!

- 6.10. Inhuman Users

- 6.11. DAC Summary

- 6.12. Capabilities

- 6.13. Mandatory Access Control

- 6.14. SELinux

- 6.15. AppArmor

- 6.16. GRSecurity

- 6.17. Application Level Access Control – Apache

- 6.18. Application Level Access Control – WordPress

- 7. Network Based Attacks

- 8. Firewalls

- 9. Intrusion Detection

- 10. Denial of Service Attacks

- 11. Slowloris

- 12. DNS Attacks

- 13. Hiding Your Server

- 14. How CDNs Protect Themselves

- 15. IP Leakage

- 16. Cryptographic Concepts

- 17. Cryptographic Hash Functions

- 18. Cryptographic Salts

- 19. Cryptographic Functions

- 20. Public and Private Keys

- 21. Magic Server Boxes

- 22. Virtual Machines and Containers

- 23. The Drawbacks of Virtual Machines

- 24. Introducing Containers

- 25. Docker

- 25.1. Deploying With Containers

- 25.2. Getting Started With Docker and Containers

- 25.3. What are Cgroups?

- 25.4. What are Namespaces?

- 25.5. The Docker Daemon

- 25.6. The Docker Client

- 25.7. Docker Hub

- 25.8. How to Build an Image – the Low-Level Way

- 25.9. A Better Workflow

- 25.10. Dockerfiles

- 25.11. Docker Volumes

- 25.12. Docker Compose

- 25.13. Docker Security Warning

- 25.14. WordPress is Complex

- 25.15. Simpler Maintenance

- 25.16. Microservices

- 25.17. Using Multiple Containers for your WordPress Site(s)

- Step 1: Bare Bones Installation

- Securing Your Host Machine

- Installing Tripwire

- RKHunter

- Set Up SSH Certificates on Both Machines

- Prevent Super User SSH Access

- Setting Up a Firewall

- Installing Fail2ban

- Step 2: Set Up Docker on the New Machine

- Step 3: Transfer Your Site Data

- Set Up a Workspace Folder

- Exporting Your Existing WordPress Site

- One Site or Many?

- Database Containers for Multiple Sites

- Step 4: Importing Your Site into New Wordpress and Database Containers

- Step 5: Launch Your Site Inside the Docker Containers

- Step 6: Accessing Your Site on the New Server

- Step 7: Check the Site Works

- Step 8: Tightening Container Security

- Optional Step – Server Image

- Step 9: Complete the Migration

- Step 10: Automated Backups

- Step 11: Maintaining Your Server

- Summary

1 Hardening Your Server

Any computer system is only as secure as its weakest element. I previously said that most hackers target the host – the machine running your site. This is true.

To be honest, if you had a totally secure WordPress site installed on a weak server, it would still be very easy for a skilled hacker to compromise it.

Not all hackers are skilled – most have a few tricks up their sleeves, and they give up if they can’t get a result. They usually make a huge list of potential targets, and then look for the weak sites they can take down.

With the steps from the first article, you’ve taken yourself out of the “really easy” category – at least as far as your WordPress site is concerned.

While there are millions of unskilled hackers picking off weak targets, there are still thousands of skilled hackers looking for more challenging victims. To them, you’re still a sitting duck.

In this section, we’re going to share some advanced methods to ramp up your site’s security.

You will have to learn some security concepts as you go along – and there’s quite a lot of ground to cover. Our goal in writing this article was to make a complete guide that anyone can follow, regardless of their background. However, it’s possible you may find some of this stuff too technical.

If you feel that any of these ideas are too techy for you, that’s fine. There’s no shame in delegating complex tasks to professionals. You can hire someone to implement these steps, or you can host your site with a company that specializes in WordPress security (like Kinsta).

That said, it’s worth your time to gain an understanding of this stuff, even if you pay someone else to do it for you. That way you’ll know if they have done a good job or not!

We’re going to take a whirlwind tour of server security concepts, and then we’ll use these ideas to build a secure server.

Then we’re going to use some cutting-edge technology to isolate our WordPress site in a specially contained environment, where it can’t harm the system.

Next, we’ll cover methods you can use to harden WordPress against the most common attacks. You’ll also learn how to defend yourself against attacks that haven’t even been discovered yet!

We’ll cover intrusion detection tools you can use to recognize subtle attacks, so you can act fast and fix your server.

Then we’ll talk about keeping your site secure into the future.

2 Tools of the Trade

WordPress has a nice, simple visual interface. That’s great for day-to-day use – it shelters you from the horror of editing code by hand or configuring your host machine via the command line.

In this advanced section, we will be going beyond the simple actions you can perform through the WordPress admin panel. For some of our readers, this will mean using unfamiliar tools – especially the command line.

If you’ve never used a command line shell before, you may wonder why anyone would bother. Surely it’s a primitive way to control a computer?

For a long time, if you wanted to use a computer, you only had one choice – learn to use a command line shell! In the mid-1980s and 1990s, graphical interfaces started to appear, and they made it much easier for newbies to interact with their machines. And, in some cases, graphical interfaces speed up the workflow.

But that isn’t always the case. In a great many cases, a text based shell allows you to get the job done faster. True, you do have to invest a little time learning how to use a text only interface, but it’s not as much time as you would think. It’s time well spent, as you’ll gain a huge degree of control over your host machine.

When you connect to a text shell on a remote machine, you should use SSH – which stands for “secure shell”. SSH is a protocol that uses encryption to secure your connection, and it’s an absolute must.

In the section on recovering from a hack, we used SSH to remove malware. I mentioned that Macs and Linux PCs have SSH installed by default – Windows doesn’t. You can install a free SSH client for Windows called PuTTY – it’s the most popular choice, and it does the job well.

While you can do a lot over SSH, editing files can be a little tricky. There are good shell based text editors such as Vim, Emacs and Nano. They present their own learning curve – in the case of Vim and Emacs, it’s quite a steep learning curve! Text editing is also one of those areas where graphical interfaces do a better job – especially for code.

If you don’t want to spend the time getting to grips with one of the shell based code editors, you could simply edit the files on your machine and upload them to the server with an FTP program. This can quickly become a chore when you make multiple changes in a short time – especially when you have to change the file permissions of your uploaded files each time.

Alternatively, you can use a service like Cloud 9. Cloud 9 is a complete cloud based IDE (integrated development environment) for Linux based software. An IDE is like a text editor – but it’s optimized for code. It has tools for debugging and testing, and its goal is to make programmers more productive.

Cloud 9 has an option to link the editor to your server box via SSH – it’s one of their premium services (i.e. you can’t do this on a free account). The benefit of using software like Cloud 9 is that you can use a visual interface to browse directories and edit files, and you can use the shell to execute commands. It’s the best of both worlds.

As you secure your site, you will certainly have to edit some code – an IDE can help you spot and eliminate mistakes, and it can speed up the process for you. When you edit the file on the remote machine, the permissions will remain unchanged (if you don’t know what I’m talking about, it will become clear later on in the article).

If you don’t want to pay for Cloud 9, you can use a more traditional IDE like Eclipse. With Eclipse’s “Remote System Explorer” you can access the remote machine as if it were on your PC – behind the scenes, the IDE uses SSH and SFTP to modify the host machine.

IDEs are sophisticated apps with hundreds of features – and you only need a few of them for your task. Don’t feel you have to learn the entire system before you can get started!

Also, you can access multiple SSH sessions at the same time. So you can open two PuTTY windows, or use PuTTY and an IDE side-by-side.

Now you’re armed with the tools you need, let’s broaden your understanding of security. Then we’ll dive into the server specifics.

3 How Hacks Happen

In reality, most hacks are opportunistic. You get hacked because an attack script identified weaknesses in your site or server. New weaknesses are discovered every day, and these lead to exploits and hacked sites.

Opportunistic hackers use an array of tools to identify potential targets. It all starts with a list of exploits. Let’s say a weakness has been discovered in a popular slideshow plugin.

The hacker learns about this weakness and investigates the plugin. They learn that there’s a recognizable “footprint” for the plugin – every site that uses it has the text “Powered by MyCoolSlideShowPlugin”.

From this point, it’s easy to scrape Google to build a huge list of hackable sites. So that’s what the attacker does.

Then they write a simple script to perform the hack, load up their list of targets, and set it loose. The script goes out onto the web and attempts to hack every site on the list.

Some hacks will succeed, many will fail. The script will record the sites that are susceptible to the weakness. After the initial run, the attacker can go back to the list and filter it to find the most popular sites.

At this point, they have a number of options. They could insert links into the site to boost their SEO rankings. Hackers can make a killing selling links from exploited sites. Alternatively, a hacker could deface the site and demand money to restore it (ransom).

They could even place ads on the site and use the traffic to make money.

In most cases, an attacker will also install backdoors into the server. These are deliberate security holes that allow them to come back and exploit the site in the future – even if the insecure plugin has been replaced.

From the viewpoint of the victim, it can feel like persecution. Their site keeps getting hacked again and again.

The point is that most of these attacks are not personally motivated. It doesn’t matter if you’ve led a blameless life and have no enemies. If your site is exploitable, it’s only a matter of time until someone does exploit it.

So it’s in your best interest to ensure your site is not an easy target.
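You can get a feel for what an automated scan sees by searching your own pages for footprints. The sketch below is only an illustration: the pattern list is hypothetical, the first entry is the made-up plugin from the example above, and the second assumes the usual /wp-content/plugins/ folder layout.

```python
import re

# Hypothetical footprints; the first is the made-up plugin from the example above.
FOOTPRINTS = [
    r"Powered by MyCoolSlideShowPlugin",
    r"/wp-content/plugins/([a-z0-9_-]+)/",
]


def find_footprints(html):
    """Return the distinct footprint strings found in a page's HTML."""
    hits = set()
    for pattern in FOOTPRINTS:
        for match in re.finditer(pattern, html, flags=re.IGNORECASE):
            hits.add(match.group(0))
    return sorted(hits)


# A tiny stand-in for the HTML of one of your own pages.
page = """
<link rel="stylesheet" href="/wp-content/plugins/my-cool-slideshow/style.css">
<footer>Powered by MyCoolSlideShowPlugin</footer>
"""

for hit in find_footprints(page):
    print("visible footprint:", hit)
```

Anything this prints is something a scraper could use to put your site on a target list.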

You may be asking yourself – how do weaknesses come about, and how do they get discovered?

To begin with, most programmers are focused on a single goal. They want to make software that works – whether it’s a theme, plugin, or full-blown application.

They have a set of requirements and features, and they’re highly focused on getting them implemented. They usually work with tight deadlines, so they don’t have much time for any other concerns.

Many developers are very weak in the area of security (not all, of course). They may think it’s not an issue because the plugin they’re working on doesn’t handle sensitive data.

Unfortunately, under WordPress, every plugin and theme has the ability to alter anything on the site. They can even be exploited to infect other apps and sites hosted on the same machine.

As soon as the plugin works, the developer releases it. If it’s useful or has cool features, hundreds or thousands of people will download it.

Once the plugin is released, it also comes to the attention of hackers. All hackers are fascinated by how things work – whether they’re ethical hackers or black hats.

They want to know how the code works, and how to make it do things it wasn’t designed to do. Maybe they plan to exploit it. Maybe they want to warn others about security risks. Maybe they’re just bored and want to entertain themselves.

At the most basic level, all code works in the same way – it takes input, processes it, and spits out output. When it gets an expected input, it should produce a sane output. The trick is working out which inputs will make the code do something unexpected – which inputs create unexpected output.

Quite often, that unexpected output is harmful. It could damage vital data, or expose private information. In these cases, the hacker has discovered an exploit. If the input only causes the app to crash or act funny, it’s not an exploit as such – it’s more correct to call it a bug.

Some people consider all exploits to be bugs, and that makes sense. A program should be secure – if it can be tricked into doing something insecure, then that’s certainly an unwanted “feature”.

Finding exploits isn’t easy – you have to read the code and really understand what it’s doing. You have to trace the input data through the code, and recognize the places where it can do something dangerous. With experience, you learn to spot the weaknesses.
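Here’s a small sketch of the idea, assuming a made-up upload folder and two hypothetical helper functions. It isn’t taken from any real plugin. It simply shows how an input the programmer never expected can steer a file path somewhere dangerous, and how a simple check stops it.

```python
import os

UPLOAD_DIR = "/var/www/uploads"  # hypothetical directory, for illustration only


def naive_path(filename):
    """Build a path straight from user input, with no checks."""
    return os.path.normpath(os.path.join(UPLOAD_DIR, filename))


def checked_path(filename):
    """Build the same path, but reject anything that escapes UPLOAD_DIR."""
    path = os.path.normpath(os.path.join(UPLOAD_DIR, filename))
    if not path.startswith(UPLOAD_DIR + os.sep):
        raise ValueError("unexpected input: " + repr(filename))
    return path


# Expected input produces a sane output.
print(naive_path("photo.jpg"))

# Unexpected input makes the naive version point outside the upload folder.
print(naive_path("../../../etc/passwd"))

# The checked version refuses the same input.
try:
    checked_path("../../../etc/passwd")
except ValueError as err:
    print("rejected:", err)
```

Tracing where `filename` ends up is exactly the kind of reading a hacker does when they hunt for weaknesses.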

After a hacker has found an exploit, one of several things will happen:

- They exploit it, causing damage to as many targets as possible. This is the worst-case-scenario.

- They publish it – often in the hopes that the original developer will fix it.

- They contact the publisher discreetly and tell them to fix it. Then they publish the exploit.

When the exploit is published before the fix has been made, it’s called a “zero-day exploit.” From this moment, until the exploit is fixed, the users are at risk.

Once the cat’s out of the bag, other hackers will learn about it. The exploit is public knowledge – but the issue hasn’t been fixed yet. It may be a quick fix, but often it takes a while for the project’s team to put together a patch. They need to test their fix thoroughly – a small change to the code could cause other bugs or security weaknesses.

In the meantime, the plugin’s users are at risk. If the developer is aware of the exploit, they’ll work fast to fix it and publish the update. But people can be slow to apply these updates – some sites are still running old plugin versions with holes that were patched years ago. Until the plugin is updated, their site is at risk.

Lacking real programming skill isn’t as much of a handicap as you may think. There are “exploit kits” that automate the process of cracking websites using known exploits. These tools are used by security experts to spot weaknesses in their companies’ defenses – they’re also used by black hats to cause chaos on the web.

An exploit kit, like Metasploit, has a huge database of exploits, together with scripts to use them. It also has tools to customize and deliver malware payloads to compromised servers. Armed with these tools and a small amount of knowledge, an inexperienced hacker can wreak havoc.

According to WP White Security, 29% of WordPress sites are hacked through an insecure theme. Some innocent looking code buried deep in the theme allowed an attacker to gain a foothold in the system.

Theme developers are often relatively inexperienced coders. Usually, they’re professional graphic artists who have taught themselves a little PHP on the side.

Plugins are another popular line of attack – they account for 22% of successful hacks.

Put together, themes and plugins are a major source of security trouble.

4 Security – An overview

We’ve examined how exploits are discovered and used to hack sites. Your goal is to protect your site from such an attack. To do that, you need a good understanding of security.

So, what are the key ingredients of cyber security?

- Know Your System

- The Principle of Least Privilege

- Defense in Depth

- Protection is Key but Detection is a Must

- Know Your Enemy

- Proactive Vs Reactive

Know Your System

Before you can secure your site, you need to understand how your system works. The deeper your understanding, the more easily you can spot and fix weaknesses.

To begin with, you should develop a good understanding of how your WordPress site works. Specifically, you need to know which Plugins are responsible for which features, what custom code has been added, and so on.

But the “system” includes the server environment, too. That’s why we’ll be covering server security at the end of this article.

Principle of Least Privilege

Each person who uses your system should only have the privileges they need to perform their tasks.

There’s no reason for a web designer to have root access to your server. A website visitor should only be able to access the public pages on your site. Writers should be able to log on and write content – they shouldn’t be able to install plugins or new themes.

Understand who is interacting with your site, and understand which privileges they require to perform their tasks. Give them that much access – no more, no less.
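To make this concrete, here’s a minimal sketch of a privilege table. The role and action names are invented for illustration and don’t correspond to WordPress’s own roles. Each role lists only what it needs, and anything not listed is refused.

```python
# Hypothetical roles and actions, loosely modelled on the examples in the text.
ROLE_PRIVILEGES = {
    "visitor": {"read_public_pages"},
    "writer": {"read_public_pages", "log_in", "write_content"},
    "designer": {"read_public_pages", "log_in", "edit_theme"},
    "admin": {
        "read_public_pages",
        "log_in",
        "write_content",
        "edit_theme",
        "install_plugins",
    },
}


def is_allowed(role, action):
    """Return True only if the role was explicitly granted the action."""
    # Unknown roles get an empty set, so the default answer is "no".
    return action in ROLE_PRIVILEGES.get(role, set())


print(is_allowed("writer", "write_content"))
print(is_allowed("writer", "install_plugins"))
print(is_allowed("stranger", "log_in"))
```

The important design choice is the default: if nobody granted a privilege, the answer is no.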

Defense In Depth

Don’t depend on a single security measure to keep your server safe. You need multiple rings of defense. The more layers you deploy, the harder it is to break through and hurt your site.

In other words, don’t install a single security plugin and think you are safe! Put as many barriers as possible in a potential hacker’s path. That way, only the most obsessively dedicated hacker will persist long enough to break into your site.

Protection is Key but Detection is a Must

You should do everything you can to make your site secure. But even then, don’t get complacent. You should have some way to detect an attack, so you can understand how it happened and prevent the same thing from happening in the future.

There are no guarantees in security – even the most ironclad sites get hacked from time to time. If your site ever gets hacked, you need to know as soon as possible.

Know Your Enemy

You should understand the methods people use to deface or hack websites. This allows you to strengthen your defenses.

Most of us are not malicious hackers – we don’t have the experience or the skillset. That’s our misfortune.

To know how to make systems more secure, you have to understand how they can be broken.

Security professionals study cybercrime in depth and they usually learn how to commit these crimes. They learn under controlled conditions, and they never hurt real targets.

At security conventions (like Black Hat USA), they rub shoulders with real hackers. They’re both there to learn how systems get attacked – the only difference is their goals.

Learning to think like a hacker opens your eyes to the holes in your own security.

Proactive Vs Reactive

Most people have a reactive approach to security. When something gets broken, they work out how to fix it. When a security update is released, they download it.

This protects you against known exploits, which have been published or detected “in the wild”. It’s important to fix known problems, but it’s only part of the job.

But what about the exploits we don’t know about? I’m talking about so-called zero-day exploits and future attacks that haven’t even been invented yet.

An exploit must always be discovered before it can be fixed. That means there’s a period of vulnerability.

If the person who discovers the exploit is an ethical hacker, then they’ll probably show the developer how to fix the exploit before they publish it. If the discoverer is an unethical hacker, they’ll try to exploit it for as long as possible.

Proactive security means defending yourself against unknown threats. You assume your software will be exploited at some point, so you take action now to limit the damage.

With a good plan in place, it can be very easy to recover from an attack. If you don’t have a plan, it can be almost impossible.

For instance, your plan should definitely involve backing up your vital data. If someone deletes your database, you won’t be able to recover your content unless you can find a copy somewhere.

It’s easy to recover the data if you have a recent copy. If you don’t, you’ll have to piece it together from public copies (archive.org) and random files on hard drives. Not an easy task.
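As a rough sketch of the “recent copy” idea, the snippet below copies a file into a backup folder under a timestamped name. The file and folder names are placeholders, and a throwaway file stands in for a real database export.

```python
import os
import shutil
import tempfile
from datetime import datetime


def backup_file(source, backup_dir, now=None):
    """Copy `source` into `backup_dir` under a timestamped name; return the new path."""
    now = now or datetime.now()
    os.makedirs(backup_dir, exist_ok=True)
    stamp = now.strftime("%Y%m%d-%H%M%S")
    target = os.path.join(backup_dir, stamp + "-" + os.path.basename(source))
    shutil.copy2(source, target)  # copy2 also preserves timestamps where possible
    return target


# Demo with a throwaway file standing in for a real database dump.
workdir = tempfile.mkdtemp()
dump = os.path.join(workdir, "site-dump.sql")  # hypothetical file name
with open(dump, "w") as handle:
    handle.write("-- pretend this is a database export\n")

copy_path = backup_file(dump, os.path.join(workdir, "backups"))
print("backup written to", copy_path)
```

A real plan would also move the copies off the server, since a backup that lives next to the original can be deleted along with it.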

In the rest of this article, we’ll outline a proactive security plan. No plan is perfect, and we can’t guarantee your site won’t be hacked at some point in the future.

If you follow our advice, you should be able to minimize the possibility of such an attack, and you will be able to recover quickly.

5 It’s Not Just WordPress That Gets Hacked

When you visit a WP site, WordPress is the most visible component. It’s not the only software installed on your server. Server environments are complex systems, with thousands of moving parts. Security exploits exist at all levels of the technology stack, from the hardware up.

WP White Security revealed that 41% of WordPress sites are hacked through a weakness in the web host. Some software component running on the server machine had a security hole, and a hacker exploited it.

Often, this weakness has nothing to do with the web service.

This is massive, so I’ll give you a moment for it to sink in. The biggest single source of danger has nothing to do with WordPress.

For hackers, this is great news. A server exploit is much more tempting because it allows them to attack any kind of site – WordPress, Joomla, static HTML, anything.

The remaining 8% of sites are hacked because of a weak password. While it’s important to use a strong password, password cracking is not a primary focus for hackers. That’s because there are simpler and more effective ways to attack sites.

As a footnote, a tiny number of sites (less than 1%) are hacked through the WordPress core files. This just goes to show how thorough the WordPress team is at closing security holes.

Understanding Server Security

Server security is a big subject – we could easily fill several books covering all the details – in fact, many authors have! Nevertheless, the basic concepts are quite easy to grasp.

Most public web hosts run Linux, so we’re going to cover Linux server security. Many of these concepts apply to other platforms, but the implementation details will obviously be different.

To really get a good grasp of security, you need a good basic understanding of how the operating system works. Here’s an incredibly simple overview.

How Your Server Box Works

In essence, a Linux machine is a set of files on a disk or SSD.

Of course, that’s a little like saying a tree is basically a seed.

An operating system is in motion – it responds to input and produces output, and it allows other processes to do the same. A seed requires water, sunlight and time to turn into a tree. An operating system requires a processor, memory, and other devices.

When a seed turns into a tree, we call it growth. When a Linux machine comes to life, we call it bootstrapping (or booting).

In its “living” state, a Linux OS is a file system together with a bunch of processes in memory. True, there are other components, such as input devices and output devices.

Linux has a special way of dealing with these devices – it treats them as files. This makes it much easier for programmers to deal with input and output, as they can treat everything as a file.

When they want to change a hardware setting, they write to the “file” that represents the hardware. If they need input from a device, they “read” the file.
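You can try this yourself on any Linux box. The short sketch below uses two standard device files, /dev/urandom and /dev/null. They’re provided by the kernel rather than by physical hardware, but a program opens, reads and writes them exactly as it would any other file.

```python
# Read 16 bytes from the kernel's random number source, exposed as a file.
with open("/dev/urandom", "rb") as device:
    random_bytes = device.read(16)

# Write to the "null" device, which silently discards whatever it receives.
with open("/dev/null", "wb") as sink:
    written = sink.write(b"this text goes nowhere")

print(random_bytes.hex())
print(written, "bytes written to /dev/null")
```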

Now an operating system is useless if you can’t use it to get things done. There are millions of ways you can use a computer, but in general what we want to do is take some input, process it, and produce an output.

Users achieve this by sending data to a process that is already running. This is a very important point – it has a big bearing on security. Before you can interact with the computer, there must be a running process listening for your input.

It’s like a courier trying to deliver a parcel. She knocks on the door, but if there’s nobody inside, she has to take the parcel back to the depot.

Maybe you’re trying to request a web page from a machine over the net. If there’s a web server running on that machine, you’ll get a response – hopefully, you’ll get the web page you are looking for. But what happens if there is no web server?

Nothing happens. Eventually, your web browser gives up and tells you the connection has timed out.
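Here’s a small sketch of the “is anybody home?” idea, using Python’s standard socket module on the local machine. The function name is our own invention. On a local machine the connection is usually refused straight away rather than timing out, so the sketch treats both outcomes the same way.

```python
import socket


def is_something_listening(host, port, timeout=1.0):
    """Return True if a process accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers both "connection refused" and timeouts.
        return False


# Start a tiny listener on a free port chosen by the operating system.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(1)
port = listener.getsockname()[1]

while_open = is_something_listening("127.0.0.1", port)  # a process is listening
listener.close()
after_close = is_something_listening("127.0.0.1", port)  # nobody is home now

print("while the listener was running:", while_open)
print("after it was closed:", after_close)
```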

In very broad terms, users interact with running processes through the operating system – specifically through the kernel. These processes take the data and do something with it. Then they produce some output – again, they go through the kernel to make this happen.

What is the Kernel?

The kernel is a program.

It’s the most important process in the system because it’s responsible for making the whole system run properly. It handles the computer’s devices, the file system, and the network. It also manages all the other processes running on the machine, providing them with resources, starting them and stopping them.

The kernel is actually the heart of an operating system. On its own, it’s not an entire OS – you also have other programs that provide services and create an environment where applications can run.

It’s entirely possible to write a program that runs without an operating system. Such a process would be very complex – it would have to manage the input and output devices itself, running at a very low level. In a system like this, you could only have a single process running at a time.

The earliest computer programs worked like this, and many still do. These programs usually live in “single purpose” systems inside electrical devices – such as a wireless remote control, or a microwave oven.

A single purpose program is very useful in devices we use for a single task. But computers are supposed to be general purpose. Nobody would buy a computer that can only play solitaire.

If you want to run multiple processes at the same time, you need some way of managing them. This is basically what a kernel is. It does more than that – it handles all of the complex details of the computer hardware, too. And it runs the computer’s networking capabilities (through the hardware).

Early mobile phones were single purpose machines. You used them to make and receive phone calls. Then someone decided it would be a good idea to also store numbers on the phone.

At this point, there were two tasks – still managed by a single program. However, maintaining a large program that does many different tasks is a hard task. At some point, phone developers decided it would make sense to build a simple operating system for their devices.

Having an operating system made it easier to add new functions. Jump forwards a few decades, and you have smartphones – which are fully functioning computers with complex operating systems.

As an interesting note, iOS and Android are both built on Unix-like kernels. iOS is built on XNU, and Android is built on the Linux kernel.

Processes Creating Processes

In a Unix-like machine, processes can create other processes to take care of some part of their job. This is very similar to the way people work in the real world.

If you open a restaurant, you hire people to do the different tasks – cooking food, greeting and serving guests, cleaning, etc. Of course, you don’t “create” people to do these jobs (if it were possible, employers probably would!)

As a user, you interact with a user interface – a graphical one or a text one. This interface is a process (often more than one).

When you launch an application, you actually tell the user interface to create and run the app’s process. The interface can’t do this on its own – it has to ask the kernel to do it.

Linux distros ship with a large number of programs that are really great at doing simple common jobs. Application developers can achieve complex tasks by delegating smaller tasks to these simple programs. When their application does this, it asks the kernel to create the process and send it data.

This is one of the reasons why Linux and other Unix-based systems are so popular. The toolset is amazing and well understood.
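The delegation described above looks something like this in practice. This sketch assumes the standard `sort` program is installed, as it is on practically every Linux distro. Our script hands a small job to `sort` instead of doing the sorting itself.

```python
import subprocess

words = "pear\napple\nfig\n"

# Ask the operating system to start the standard `sort` program,
# feed it our text on standard input, and collect what it prints.
result = subprocess.run(
    ["sort"],
    input=words,
    capture_output=True,
    text=True,
    check=True,
)

print(result.stdout)
```

Behind the scenes, Python asks the kernel to create the new process and to pass the data back and forth.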

When Computers Go Wild

So far, I’ve painted a very nice Utopian image of how things work inside a Linux machine. An image where dozens of processes work together smoothly to fulfill your every wish. Unfortunately, real life is different.

Computers often go wrong. Instead of doing what you want, they do nothing. Or worse, they do something utterly horrible.

There are three reasons why this happens:

- The computer is broken

- There’s a bug in the software

- Some evil, malicious person has deliberately misused the system to mess with you

All three of these situations happen very often. In security, we’re interested in the third case. The second case is also important – hackers often exploit bugs to make bad things happen.

While this article is about security, you also have to plan for the other two possibilities – we’ll leave that discussion for another day.

Hackers

It would be a wonderful world if everyone respected other people and their property. Nobody would commit any crimes, everyone would be safe all the time.

Unfortunately, that’s not the world we live in. In the real world, there are people who steal and cause harm just because they can. Connecting your computer to a public network makes it a target for these people.

Computer criminals are usually called hackers. There are plenty of people who object to that – the word “hacker” originally meant a computer enthusiast. People still use the word that way.

Thanks to the media, we’re stuck with the “hacker = criminal” mentality. Even the criminals call themselves hackers. So please don’t take offense when I use “hacker” to imply criminal intent.

What are hackers trying to achieve? They want to either:

1. Break your system – prevent your software from doing what it should

2. Make your system do something it shouldn’t

Simply being an evil hacker doesn’t give you magical powers over computers. You would still have to interact with computers in the same way legitimate users do – by sending data to existing processes through the operating system.

This brings us to a very important point – your machine’s attack surface.

The Attack Surface

Hackers can only interact with the processes that run on your system. When you only have a couple of processes running, they only have a couple of targets to work with. If you have many processes running, you give them a bigger target to aim at. We can think of this “target size” as a surface area – the larger the surface, the easier it is to cause harm.

Each new process running on your system increases the hacker’s chances of breaking into your machine.

Now, you may have hundreds or thousands of programs on your machine – it’s unlikely they’re all running at the same time. But these dormant programs are also a part of your attack surface.

If a hacker gains control of a running process, they can use it to launch other processes – they can use these processes to achieve their goals. If the program they need is already installed on your machine, it makes their job much easier.

Let’s imagine an example – let’s say the hacker wants to delete your hard drive. Let’s imagine your system has a program that deletes hard drives (Linux does ship with programs that can do this).

Now the hard drive deleting program is very unlikely to be active when the hacker gains access to your system – unless you were deleting a hard drive at the time! So the hacker has to gain control over an active program first.

Let’s say you’re also running SSH (a program that allows you to log on to a text interface over a network). And, for the sake of simplicity, let’s imagine you allow any anonymous user to log onto your system through SSH.

Now the job becomes very simple: log on to SSH, then type the command to run the hard drive deleter.

Clearly, this is a very insecure system!

If the hard drive deleter was not already installed, the hacker would have a harder challenge. They would have to:

- Log on to SSH

- Install a hard drive deleter

- Run it

It’s not very hard to do that, but it’s more work.

This example illustrates a point – the more software you have installed on your machine, the easier it is to hack – even if you aren’t using the programs!

Clearly, programs that are designed to destroy your system are dangerous. But even innocent software can be used in an attack.

A screwdriver is an innocent tool, designed to – well, drive screws. But it makes a pretty nasty weapon in the wrong hands.

So the simplest security step is to remove software you don’t need. If the program serves no useful purpose in your system, it shouldn’t be there. Even if it seems innocent, there could be some inventive way to use it harmfully.

Modern operating systems are designed to be used in many different situations, for different purposes. Users hate installing software – they want their machine to work out of the box. So OS developers anticipate their users’ needs and ship a huge selection of popular programs with every release.

If your web host is running a popular Linux distribution, it probably has hundreds of programs you don’t need. This creates a big attack surface!

There are 3 ways to reduce the attack surface:

1. Run fewer processes

2. Uninstall programs you don’t need

3. Build a system from scratch that only has the processes you need

Building an entire Linux distribution is quite a tall order. But you can start with a very minimal system (such as Alpine Linux). Then you add the software packages you do need and ignore the ones you don’t.

That’s quite a challenging chore because you often have to compile programs from their source code. What’s more, you may have to track down obscure dependencies – and that’s a little too awkward for our article today.
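
Before you can trim the attack surface, it helps to see what is actually running. Here's a minimal sketch that lists running processes, assuming a Linux machine where the kernel exposes one numbered directory per process under /proc. The function names are made up for this illustration.

```python
import os


def running_pids(proc_root="/proc"):
    """Return the PIDs of running processes, read from a Linux-style /proc."""
    # Every running process shows up as a directory whose name is its PID.
    return sorted(int(name) for name in os.listdir(proc_root) if name.isdigit())


def command_name(pid, proc_root="/proc"):
    """Return the short command name for a PID, or None if it is gone."""
    try:
        with open(os.path.join(proc_root, str(pid), "comm")) as f:
            return f.read().strip()
    except OSError:
        return None  # the process exited, or we may not read its entry


if __name__ == "__main__":
    pids = running_pids()
    print(f"{len(pids)} processes are running")
    for pid in pids:
        name = command_name(pid)
        if name:
            print(pid, name)
```

Anything in that list you can't explain is worth investigating. Remember this only covers running processes, not the dormant programs sitting on disk.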

Trust

At heart, security is all about trust. Who do you trust? Hopefully, you trust yourself. You know you aren’t going to destroy your own server in a fit of melancholy.

What about your employees? Sure, you trust them, but how much? Not as much as yourself. A disgruntled or anti-social employee could damage your system out of spite.

What about the public? You know some of them, and you know they want your site to succeed. They rely on your services or whatever you provide to them.

But you don’t trust them entirely. You wouldn’t give them the keys to your business empire – maybe they would steal your resources so they didn’t have to pay for them.

Then there are anonymous users. This is the rest of the world’s population – strangers that appear on your site, reading your content. Are they good or bad? There’s no way to be sure.

It’s easy to get paranoid, but it’s not very productive. Instead, you need some kind of policy that controls what people can do with your computer. The people you trust the most can do the most. People you don’t trust should be able to do very little.

What you need is some kind of automatic system that enforces these trust policies. These systems exist – some of them are built into your operating system already.

Users

A user is someone who uses something. In computing, a user is someone who uses a computer.

Computers are basically machines that do what they’re told. Someone tells a computer to do something, and it does. But should a computer always do what it’s told?

It depends on who is telling it, and what they’re telling it to do.

If you own the computer, it should follow your orders. Of course, it’s possible you could accidentally tell the computer to do something crazy – like delete everything on your main hard disk.

So it’s useful to put some limitations on your own access – even if it’s just a question that pops up and says “are you really sure you want to do that?”

Other people should have limited access, based on how much you trust them to act in your best interest.

Computers need some way to know who is giving them orders. They do this through the concept of user accounts and authentication.

When you gain access to a computer, it will identify you as a user based on some kind of identification process. This is called “authentication”. There are different ways to perform authentication – the simplest is asking for a username and password. Complex systems use bio-scanning software to scan your retina, read your fingerprint, or check your birthmarks (or whatever).

Other systems check for cryptographic keys installed on your computer.

A really good authentication system uses multiple tests. Someone could steal or guess your password. They could grab your laptop with its cryptographic keys. Or they could cut off your finger (which would also be a problem).

It’s unlikely they would do all three.

Processes

The idea of user accounts is a useful abstraction. In reality, users can only interact with the system through processes. You don’t step into the machine like a character from the Tron films.

In other words, the kernel doesn’t deal with users. It deals with devices and processes.

When a program is executed, the kernel creates a process space in memory and loads the program into it. It tags this process with data that identifies which program is running inside. And it also tracks which user created the process.

Processes can only interact with the system’s resources through the kernel. These resources are pretty much everything outside the processes’ memory space (such as files or the network).

When a process asks the kernel to do something, it has to make a decision. Should it comply, or should it refuse the request? It depends on what the request is, and who is asking.

To identify the “who” part of the question, the kernel checks the user account associated with the process. If it’s a superuser (system administrator), it should probably do what it’s told. If it’s an untrusted account, it might refuse.

The kernel makes this decision based on one (or more) access control settings.
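
You can see the "who" that the kernel attaches to a process for yourself. This is a small sketch, assuming a Unix-like system. It asks the operating system which user account the current Python process is tagged with. The function name is just for illustration.

```python
import os
import pwd


def describe_current_process():
    """Return the PID and the user account attached to this process."""
    uid = os.geteuid()  # effective user ID: the one used for permission checks
    try:
        user = pwd.getpwuid(uid).pw_name
    except KeyError:
        user = None  # some containers run with a UID that has no account entry
    return {
        "pid": os.getpid(),
        "uid": uid,
        "user": user,
        "is_superuser": uid == 0,  # UID 0 is root
    }


if __name__ == "__main__":
    print(describe_current_process())
```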

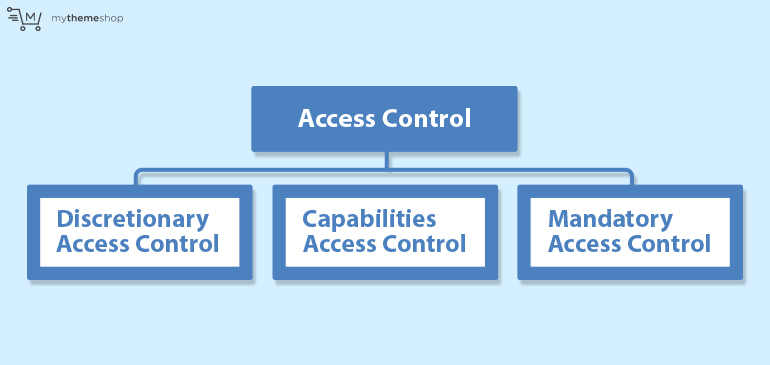

6 Access Control

We previously mentioned automated systems for controlling who can do what. These systems are called “access control”. They control which users can access the resources on your system. At a low level, resources are files and processes.

There are 3 types of access control that work at a very low level in Linux. These are:

- Discretionary Access Control (DAC)

- Capabilities Access Control (CAP)

- Mandatory Access Control (MAC)

They’re not mutually exclusive – you can use them together.

Now, the names don’t really explain what they are, so let’s examine each one.

Discretionary Access Control

Discretionary access control is built right into the basic Linux kernel – it’s a part of the Unix standard. It’s a way to describe which users can access a file or process on a computer. It’s very tightly coupled with the idea of user accounts. And it introduces the concept of ownership.

Every file is owned by some user. When you log on to a Linux machine and create a file, you’re the owner. File owners can also transfer a file to a different user.

The file’s owner has discretion over who can use it, and how. They control this permission by tagging the file with data that the kernel can read.

Everyone who accesses a computer is a user. They have their own permissions (enforced through DAC). Linux also has the concept of a user group.

Let’s imagine a system with 4 users, Alice, Bill, Charlie, and Delilah.

Alice is the system administrator – she’s the superuser.

Bill and Charlie are software developers. We should group them together because they have a similar job, and they need to cooperate to get it done.

Delilah is a customer.

Alice should have complete control over the system.

Bill and Charlie should be allowed to create, edit and delete the source code for their software. And they should be allowed to compile the source code to build applications.

Delilah should only be allowed to download the applications she has bought.

DAC gives us a simple way to enforce these policies.

As Alice is the superuser, she has no restrictions. She basically bypasses the entire DAC system and can do whatever she wants. She can edit source code, delete it, compile it, download the app – basically anything goes for Alice.

She can also create new users, and remove old ones from the system (maybe the company fires Bill and hires Edward).

To Linux, there are only 2 types of user accounts – superuser (or root) and regular user. So, Bill, Charlie, and Delilah are all regular users.

However, you don’t want Delilah to get her hands on the source code, or go crazy with the compiler. So you need to limit her access.

You can do this with file ownership. Delilah is given a directory on the system that she owns. The software she bought is loaded into this directory, and she’s the owner of that file, too.

Every other file belongs to someone else – Bill, Charlie, and Alice.

Permissions

So, how does the kernel know if it is allowed to show files to Delilah? Through permissions.

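Under Linux, there are 3 things you are permitted to do to a file. You can read it. You can write to it (change its contents). And you can execute it – turn it into a running process. Strictly speaking, deleting a file depends on the write permission of the directory that holds it, but we’ll keep things simple for now.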

Owner Permissions

Delilah should be able to read the app files in her directory – otherwise, she can’t download them! Should she also be able to write to them – to change the files on the server? Nope! She might break them, and then blame the company for it. She can do that on her own machine!

Should she be allowed to execute the file – run it on the company’s server? Again, no. Let her pay for her own computer resources!

Delilah’s access should be read-only. If we lay that out in a table, we get the following:

| Read | Write | Execute |

|---|---|---|

| Yes | No | No |

Computers love numbers – everything in a computer is encoded as a number, including file permissions. So the permission is encoded as a binary number that looks like this:

| Read | Write | Execute |

|---|---|---|

| 1 | 0 | 0 |

The binary permission number is 100. That’s not “one hundred” – if you convert that into a decimal number (which humans like) you get 4. Here’s why:

| 4 | 2 | 1 |

|---|---|---|

| 1 | 0 | 0 |

The “1” in “100” means 1 * 4. The first zero means 0 * 2. The last digit – zero – means 0 * 1. 4 + 0 + 0 = 4.
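
If you'd like to check the arithmetic, here's a quick sketch in Python. The helper name is made up for this example. It simply adds up the 4, 2 and 1 weights from the table above.

```python
def permission_digit(read, write, execute):
    """Combine three yes/no permissions into a single digit from 0 to 7."""
    return (4 if read else 0) + (2 if write else 0) + (1 if execute else 0)


# Read-only access: binary 100
print(permission_digit(True, False, False))  # 4

# Python can also do the binary-to-decimal conversion directly
print(int("100", 2))  # 4

# And back again: 5 written as three binary digits
print(format(5, "03b"))  # 101
```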

When Delilah attempts to download her application, she uses an FTP program on her computer, that chats with the FTP service running on the server. She tells it to download the file. The FTP service asks the kernel to read the file.

The kernel has to decide if Delilah should be allowed to read the file. So it checks who owns it – Delilah does! Does she have permission to read the file? Its “owner” permission is set to 4, so yes, she does! The kernel agrees to the request and sends the file to her.

What if Delilah attempted to upload a file with the same name to her directory? FTP allows people to upload files, so her client program on her computer has no problem with the request. It sends it to the FTP daemon running on the server.

The FTP daemon sends the request to the kernel – Delilah wants to write to the file. The kernel has to make a decision – does Delilah have a write permission on the file?

Well, she is the owner, and the owner has a permission of 4 (100 in binary). Wait a minute, the write bit is set to zero. Delilah does not have write permission! Request denied!!

The kernel tells the FTP daemon to get lost. Fortunately, the FTP daemon has a tough skin and takes rejection well. So it sends a message to Delilah to tell her her request was rejected.
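
The kernel's yes/no decision for the owner boils down to testing a single bit. Here's a simplified sketch of that test using the constants from Python's standard `stat` module. The `owner_may` function is invented for this illustration and ignores everything except the owner bits.

```python
import stat

# Map each action to the owner bit that controls it
OWNER_BITS = {
    "read": stat.S_IRUSR,
    "write": stat.S_IWUSR,
    "execute": stat.S_IXUSR,
}


def owner_may(mode, action):
    """Return True if the file's owner bits allow the given action."""
    return bool(mode & OWNER_BITS[action])


delilah_app = 0o400  # owner digit 4: read only

print(owner_may(delilah_app, "read"))   # True  -> download allowed
print(owner_may(delilah_app, "write"))  # False -> upload rejected
```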

What about the other files on the system – the source code files, for instance?

Public Permissions

Bill has created a file called “my_app.go”. This file lives inside the /source directory, which he owns. He doesn’t want unauthorized users to access the file, so he set the “public” permission to “000” – can’t read, can’t write, can’t execute.

If Delilah attempted to download this file, the kernel would say “No. The read bit is zero.” If she attempted to write to it or execute it, she’d get the same response.

Setting the right public permission is very important – some resources should be available to the public. For instance, if Delilah wanted to read the contents of her folder, she’d have to run the ls program (which lists the contents of a directory). This program is a file on the computer system, usually located at /bin/ls.

To access and run this program, Delilah would have to have the “execute” permission on the /bin/ls file. And so would other users. It has to be a public permission, as Delilah neither owns the file nor belongs to its group.

Should she be allowed to write to the file (change the program)? Hell no! She could put a virus inside it. So the public permission code should be:

| Read | Write | Execute |

|---|---|---|

| 1 | 0 | 1 |

That’s 101 in binary, or 5 in decimal.

Group Permissions

So, we’ve seen how DAC applies to Delilah and her downloads.

What about Bill and Charlie?

Bill was the first programmer who worked for the company, and he created the /source directory and the early code. As time passed, the job became too big for one programmer, so they hired Charlie. Charlie has created several of his own files in the /source/ directory, and he also edits Bill’s files from time to time.

As the senior programmer, Bill likes to check up on Charlie’s code, and he often has to correct mistakes.

Bill and Charlie should be able to read, write and execute their own files – the owner permissions on these files should be:

| Read | Write | Execute |

|---|---|---|

| 1 | 1 | 1 |

That’s 111 in binary or 7 in decimal.

However, we’ve already said the public permissions should be set to 000 (or plain old 0 in decimal). So how can they access each other’s files?

A file can only have one owner, after all.

The answer is to create a user group called “developers” and add Bill and Charlie to it. Then tag all the source code files as belonging to this user group.

In addition to owner and public permissions, files have group permissions and group owners.

For these files, every member of the “developers” group should have the same access as the owner – which was 7.

Putting it Together

When we list a file’s permissions, we combine these 3 numbers in this sequence: owner-permission group-permission public-permission.

For Delilah’s application file, the permissions would be:

400

For the source code files, the permissions would be:

770
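
One practical catch: each digit runs from 0 to 7, so the three-digit value is really an octal (base 8) number. Here's a small sketch, assuming a Linux filesystem, that applies a mode to a temporary file and reads it back. The helper name is hypothetical. Note the `0o` prefix, which tells Python the number is octal.

```python
import os
import stat
import tempfile


def set_and_read_mode(path, mode):
    """Apply a permission mode to a file and return what was stored."""
    os.chmod(path, mode)
    return stat.S_IMODE(os.stat(path).st_mode)


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile() as f:
        stored = set_and_read_mode(f.name, 0o770)
        print(oct(stored))                             # 0o770
        print(stat.filemode(os.stat(f.name).st_mode))  # -rwxrwx---

    # Forgetting the prefix gives a very different set of permissions:
    print(oct(400))  # 0o620, not 0o400
```

The `-rwxrwx---` string is the same information in the letter form that the `ls -l` command prints.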

Directories Are Weird

Remember how I said the “execute” permission means the permission to run code as a process? Well, directories are different. When you “execute” a directory, you’re actually accessing the directory’s contents.

Files live in directories, and to access them, you have to have “execute” permissions on the containing directory.

Let’s say Delilah’s folder was set to 400

This means she has permission to read (but not write or execute) the folder.

So she can’t rename it or delete it. But she can’t access the files inside it, either!

Here’s the correct owner permission:

| Read | Write | Execute |

|---|---|---|

| 1 | 0 | 1 |

That’s 101, or 5 in decimal. So the correct permissions value would be:

500
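
As a memory aid, here's a tiny sketch that spells out what each bit of a permission digit means when the target is a directory. The function and its wording are my own summary, not official terminology.

```python
def directory_rights(digit):
    """Describe what one permission digit (0-7) allows on a directory."""
    rights = []
    if digit & 4:
        rights.append("list the names of its entries")
    if digit & 2:
        # In practice this also needs the execute bit to be useful
        rights.append("create, rename, or delete entries")
    if digit & 1:
        rights.append("enter it and reach the files inside")
    return rights


print(directory_rights(4))  # read only: can see names, cannot open the files
print(directory_rights(5))  # read + execute: can list and open the files
```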

Elevated Privileges

Charlie’s having a bad day. First of all, he set the permissions wrong on one of his new source code files – instead of setting it to 770, he used 700.

Next, he fell down an old well. Don’t worry, the rocks broke his fall. The rescue chopper is on the way and he should be able to return to work in a month.

Meanwhile, Bill has to review the new source code and compile it. The only problem is that Bill doesn’t have access to the file – the group permission is 0. In fact, Bill only knows the file exists because he has read and execute permissions on the directory, so he can list the contents.

Bill could log on under Charlie’s account, but this is a bit dodgy. Also, Charlie’s the only one who knows his password, and he’s in no state to talk to Bill.

So Bill does the only thing he can – he asks Alice to change the permissions for him.

Alice is far from happy – she already has more work on her hands than she can manage, what with this massive system with 4 users and all! But it’s the only way out, so she puts down her Sudoku book and logs on.

There is another option that would save Alice’s precious time. She could authorize Bill to use the sudo command.

Sudo stands for “substitute user do” – it used to stand for “superuser do”, but it’s more useful now. It allows you to temporarily become another user to execute a command. The new process that you launch runs as that other user.

If Alice configures sudo to accept Bill, he could temporarily elevate his access to the superuser level, and run commands as if he were Alice. This allows him to change the permissions on Charlie’s file, as the superuser has no restrictions!

Of course, with great power comes great responsibility – the sudo command can be dangerous. We’ll come back to this concept later. But for now, let’s assume Bill is a trustworthy guy.

In day to day work, developers use sudo for all manner of purposes. Installing new software packages is a major reason. Software packages are usually installed in directories that belong to the superuser. These are where the system software lives, along with programs that are intended for all users on the system.

To install software to these directories, you need superuser access. The sudo command gives you the power to install packages from a less-privileged account.

Upgrading Bill’s account to full-time superuser access would be a bad idea. He could make important changes without consulting Alice first. Or he could accidentally cause some kind of damage. By forcing him to type “sudo” before running any important command, he has to take a moment to think about what he’s doing – at least in theory.

A New Group Appears!

One day, the impossible happens. The company manages to get a new customer – Engelbert!

This poses a bit of a problem. Do they create a new download directory for him? Well, the application files are rather big, and they only have a small hard drive. So why not create a new download directory and make it accessible to all their customers (both of them)?

If you remember, the permissions on the application file were set to 400, and Delilah was the owner. If Alice simply moved the file to the new directory with the old permissions intact, Engelbert would be unable to download it.

There’s a simple solution:

- Create a new “customers” group. Add Delilah and Engelbert

- Change the owner to Bill – he’s the guy who compiles the file, so he should be able to replace it when he fixes bugs or releases new features.

- Set the permissions to 740

These permissions are:

Owner - read, write, execute

Group - read only

Public - no access

But there’s a problem. When Bill creates files, they belong to him and his group (developers). To allow the customers to download these files, Alice will have to change the group owner to “customers”.

When she does this, Bill will be able to read-write-execute the file, and the customers will be able to download it (read it).

Even though Bill is not part of the customer group, he can still access the file because he is the owner. The user-owner does not have to be a member of the group-owner group!
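
To tie the owner, group and public digits together, here's a toy model of the DAC decision. It is a simplified sketch, not the kernel's real code. The `File` class, the user names and the group table are all taken from our story or invented for illustration, and "alice" stands in for the root account.

```python
from dataclasses import dataclass

READ, WRITE, EXECUTE = 4, 2, 1
SUPERUSERS = {"alice"}  # hypothetical: in our story Alice is the superuser


@dataclass
class File:
    owner: str
    group: str
    mode: int  # three octal digits, e.g. 0o740


def is_allowed(user, user_groups, file, wanted):
    """Pick exactly one permission class for the user, then test the bit."""
    if user in SUPERUSERS:
        return True                   # the superuser bypasses DAC
    if user == file.owner:
        digit = (file.mode >> 6) & 7  # owner digit
    elif file.group in user_groups:
        digit = (file.mode >> 3) & 7  # group digit
    else:
        digit = file.mode & 7         # public digit
    return bool(digit & wanted)


if __name__ == "__main__":
    app = File(owner="bill", group="customers", mode=0o740)
    groups = {
        "bill": {"developers"},
        "charlie": {"developers"},
        "delilah": {"customers"},
        "engelbert": {"customers"},
    }
    print(is_allowed("bill", groups["bill"], app, WRITE))          # True
    print(is_allowed("engelbert", groups["engelbert"], app, READ))  # True
    print(is_allowed("delilah", groups["delilah"], app, WRITE))    # False
    print(is_allowed("charlie", groups["charlie"], app, READ))     # False
```

Notice that Bill gets in through the owner digit even though he isn't in the customers group, and Charlie falls through to the public digit and is locked out.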

Inhuman Users

So far, every user we’ve considered represents a real live person. But there are times when you may want to create a non-human user.

Why on Earth would you want to do that?

No, it’s not so you can market to animals and aliens. Remember, every process that runs on a system does so as a user.

The core processes that start when Linux boots usually start as the root user, since they perform admin tasks.

Sometimes you want to start a long running process on a machine, but you want to restrict its access to the system – usually, because it will interact with people you don’t trust.

A web server is an example of such a process. It has to keep running for as long as the machine is on. And you don’t trust the people who interact with it.

Which user should you use to run the web server?

It doesn’t make sense to pick an existing human user’s account because these guys have well-defined jobs. So the solution is to create a new user with a single role – to run that process!

Then you launch the program using this user account – for instance, you could use the sudo command to become the inhuman user for a moment.

This has become a common practice, and most long running background applications are installed and run with their own user accounts.

The package manager usually sets this up for you, so you don’t have to remember it at run time. You type the command in the console, and the program switches user before it starts working.

It uses a mechanism called SUID (set user id) to run as a different user – so you don’t have to remember to type “sudo” when you run the command.

Apache (the popular web server) usually runs as “www-data” in Debian and Ubuntu distributions. Other distros use different names, such as “apache” or “httpd”.
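
If you're curious which inhuman users already live on your machine, here's a rough sketch. It relies on a common convention rather than a hard rule: service accounts are often given a login shell such as /usr/sbin/nologin or /bin/false so that nobody can log in as them. The list of shells and the function name are assumptions for this example.

```python
import pwd

# Shells that are commonly used to block interactive logins (an assumption;
# your distribution may use others)
NOLOGIN_SHELLS = {"/usr/sbin/nologin", "/sbin/nologin", "/bin/false"}


def service_accounts(entries=None):
    """Return the names of accounts that cannot log in interactively."""
    if entries is None:
        entries = pwd.getpwall()  # every account known to the system
    return sorted(e.pw_name for e in entries if e.pw_shell in NOLOGIN_SHELLS)


if __name__ == "__main__":
    for name in service_accounts():
        print(name)
```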

DAC Summary

OK, this example had nothing to do with WordPress! However, it does illustrate how Discretionary Access Control works. Let’s close this section with a simple WordPress example.

Sometimes lazy webmasters upload all their WordPress files with the permissions property set to 777. Can you see why this is a bad idea?

It means every user has full access to the files. They are free to read them, write to them, and execute them.

This makes it painfully easy to corrupt the files, inject malicious code, add executable files into the WordPress directories, and so on.

In a later section, we’ll show you the permissions you should apply to your WordPress files and directories.
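
In the meantime, here's a quick sketch that hunts for files and directories anyone on the system can write to. It walks a directory tree and checks the public write bit. The function name and the example path are placeholders, so point it at wherever your WordPress files actually live.

```python
import os
import stat


def world_writable(root):
    """Yield paths under root that any user on the system may write to."""
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            mode = os.lstat(path).st_mode
            if stat.S_ISLNK(mode):
                continue  # a symlink's own mode bits are not meaningful here
            if mode & stat.S_IWOTH:  # the public "write" bit is set
                yield path


if __name__ == "__main__":
    # Hypothetical location; adjust for your server
    for path in world_writable("/var/www/html"):
        print(path)
```

Every path it prints deserves a second look, and a file set to 777 will always show up.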

Capabilities

We’ve seen how you can use the sudo command to temporarily become the superuser. The superuser has the ability to do anything on the system because they bypass DAC entirely.

Of course, giving a regular user full access to the system is dangerous. They could cause massive damage, either by accident or on purpose.

On the other hand, there are times when a regular user needs admin powers – adding new software is an example.

Now, in a high-security organization, the solution is to never give out sudo access, and force the administrator to perform all these tasks. Even then, there are services and daemons that require some superuser abilities to work.

Here’s an example – the web server. Web servers have to listen to network traffic over port 80 – a well-known port. In Linux, only superuser processes can bind to well-known ports.

Of course, it’s extremely dangerous to allow a web server to run as the root user – an attacker could use it to gain control of the system.

So we have a catch-22. Do you run Apache as a root user so it can listen to port 80, or do you run it as a regular user and take a higher port number, like 2345?

When people visit your site, the browser requests content on port 80. If you were serving content on port 2345 instead, you’d have to use ugly URLs like this: http://www.mysite.com:2345/my_content.html.

The problem goes back to Linux’s origins, in Unix. Unix is pretty old – it emerged in the 70s, and was based on ideas from earlier operating systems. Computer systems were far simpler back then, so it made sense to see the world in terms of black and white – superusers and regular users.

Many of the Unix design principles have aged very well, but this isn’t one of them. To make web servers and other similar programs secure, we need some shades of gray. Otherwise, we can’t apply the principle of least privilege.

This is where capabilities come in. Capabilities are a newer concept that breaks down what it means to be a superuser. A superuser has many capabilities – things they can do which ordinary users can’t.

Of course, I keep talking about users. What I mean is “processes”. A user only interacts with the system through processes – those processes the user starts are tagged with their name and permissions.

Capabilities allow you to grant some of the powers of a superuser to a process, regardless of who the user is. We only give the process the abilities it needs.

Capabilities are granted to programs with the setcap command – this command is applied to the program’s file directly, and the capabilities are loaded with the program when it executes.

If the program has already started before you use setcap, you will have to restart it to see the changes.

It’s worth noting that this is usually done for you during the installation process. But you have the power of changing these settings manually.

Capabilities are a standard part of the Linux kernel – there’s no need to install any additional software.
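
For the web server case, the relevant capability is called CAP_NET_BIND_SERVICE, and a typical setcap invocation looks like `setcap 'cap_net_bind_service=+ep' /path/to/program`. Here's a minimal sketch, assuming a Linux machine, that checks whether the current process holds that capability. It reads the effective capability bitmask that the kernel publishes in /proc/self/status. The function names are invented for this example.

```python
CAP_NET_BIND_SERVICE = 10  # bit number defined in <linux/capability.h>


def effective_caps(status_text):
    """Extract the effective capability bitmask from /proc/<pid>/status text."""
    for line in status_text.splitlines():
        if line.startswith("CapEff:"):
            return int(line.split()[1], 16)  # the mask is printed in hex
    raise ValueError("no CapEff line found")


def has_capability(mask, cap_bit):
    """Return True if the given capability bit is set in the mask."""
    return bool(mask & (1 << cap_bit))


if __name__ == "__main__":
    with open("/proc/self/status") as f:
        mask = effective_caps(f.read())
    print("can bind to ports below 1024:",
          has_capability(mask, CAP_NET_BIND_SERVICE))
```

Run it as a regular user and you should see False. A process started from a program that was given the capability with setcap would report True without being root.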

Mandatory Access Control

DAC doesn’t apply to the superuser. This is a deliberate design choice in Linux, but it opens the door to a wide array of attacks. A hacker can hijack a process with superuser capabilities (such as the “passwd” command) and then modify its code while it’s running. This is hard to do, of course, but there are ways to achieve it.

By changing the code, they change the behavior. They can make it do things it shouldn’t.

There are two common tactics:

Privilege Abuse:

The hacker uses the process’s privilege level to do bad things a regular user couldn’t. If the process is running as the owner of a set of files, or a group owner, they can do things with those files that a less privileged user can’t – like change them or delete them.

In any case, this type of abuse is only possible because of the existing privilege level of the process.

Privilege Escalation:

The hacker uses some method to increase the process’s privilege or to launch a new process that has a higher level. In any case, the goal is to abuse the new, higher privilege level.

One common tactic is to force it to open another program that is configured to run as root. Suddenly the hacker is logged on as a superuser – able to do literally anything on the system.

DAC’s “all or nothing” approach to privilege is at the root of the problem. Mandatory access control (MAC) is one solution to this issue. It complements DAC and Linux capabilities to limit the damage a superuser can do.

There are several different flavors of MAC, including Rule-Based Access Control and Role-Based Access Control – which confusingly have the same acronym (RBAC)!

SELinux

SELinux is the most comprehensive example of MAC – it stands for security-enhanced Linux. It was developed by the NSA, who have very specific security requirements. They also know a thing or two about bypassing security!

Under SELinux, every resource on the computer is given two labels. A resource is a file or program (programs exist as files when they are not running in memory). One label describes the classification of the file (such as sensitive, top secret, unclassified, etc). Another label describes who is supposed to use the file (such as a task force, manager, work group, individual, etc).

Each user has a set of labels, too. These describe their role and what level of secrets they are allowed to access.

Labels exist in hierarchical levels. Teams are members of larger groups. Managers are members of these groups, but they have senior access. Groups are parts of departments – and so on.

In addition, the system administrator sets up a detailed list of rules. These rules explain the organization’s security policy in terms of these labels.

SELinux is a kernel security module that mediates how processes can act on the system. When a process sends a system call to the kernel, the kernel first checks its built-in DAC rules. If the request is OK according to DAC, the kernel asks the SELinux module whether it’s OK.

SELinux looks at the labels that apply to the process, the action it’s trying to accomplish, and labels for the resource. Then it looks up the appropriate rules. If it can’t find a rule that specifically allows the action, it sends a negative response to the kernel. SELinux’s default response is to deny any request.

If the response is negative, the kernel sends an error message to the process.
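
Here's a toy model of that two-step decision. It is nowhere near SELinux's real policy language. The labels and the rule table are invented for illustration, and the only point is the order of the checks and the deny-by-default behavior.

```python
# Hypothetical allow rules: (process label, resource label, action)
ALLOW_RULES = {
    ("web_server", "web_content", "read"),
}


def mac_allows(proc_label, res_label, action, rules=ALLOW_RULES):
    """Deny by default: only an explicit matching rule permits the action."""
    return (proc_label, res_label, action) in rules


def kernel_decision(dac_ok, proc_label, res_label, action):
    """DAC is checked first; the MAC module is only asked if DAC says yes."""
    if not dac_ok:
        return False
    return mac_allows(proc_label, res_label, action)


if __name__ == "__main__":
    print(kernel_decision(True, "web_server", "web_content", "read"))    # True
    print(kernel_decision(True, "web_server", "web_content", "write"))   # False
    print(kernel_decision(True, "web_server", "password_file", "read"))  # False
    print(kernel_decision(False, "web_server", "web_content", "read"))   # False
```

The second and third calls are the interesting ones. DAC said yes, but nobody wrote a rule allowing the action, so the answer is still no.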

SELinux is extremely comprehensive, but this power comes at a price. It’s difficult to learn, complex to set up, and time-consuming to maintain. What’s more, it has to be updated to reflect changes within the organization – and to reflect the organization’s changing practices and security policies. If someone reorganizes the work teams, it can take days for the sysadmin to catch up!

Of course, this gives sysadmins something to do when they run out of Sudoku puzzles!

AppArmor

AppArmor is an example of a MAC tool, although it’s nowhere near as comprehensive as SELinux. It applies rules to programs to limit what they can do.

You can configure AppArmor to limit processes to certain areas of the file system, for instance. You can tell the kernel which files the process is allowed to read, write, delete or execute. You can specify which ports it can open. And you can control its ability to launch other processes.

AppArmor can set or revoke specific Linux capabilities for a process. You can even limit how much memory a program can use.

When a process requests an action from the kernel, it first checks to see if DAC allows it. If the DAC request passes, then it checks the AppArmor rules.

Unlike SELinux, you don’t have to create labels for every resource on your disk. Instead, you use pathnames to specify the files a process can and can’t access.

This is simple and easy, but there’s a risk you could overlook some sensitive file or directory. SELinux would catch this kind of omission, because it labels everything and denies any request that isn’t explicitly allowed.

AppArmor is controlled through a simple language. You create a configuration file (or “profile”) for each risky process. Many profiles have been created and shared by the community, so you don’t have to write one from scratch.

However, you may want to increase the restrictions – the community files are deliberately loose to allow a wide range of use cases. In your own projects, you have specific needs, and these are unlikely to change. So you can afford to tighten the restrictions. (We’ll show you an example of this in a later section.)

AppArmor comes with tools to help you get your settings right. You can check your configurations with the “complain” mode.

In this mode, AppArmor will not block a process from doing something it thinks is not OK. Instead, it will log the violation (or complain about it).

You can test a configuration by deliberately carrying out routine operations and checking if any complaints were generated. If they were, you need to loosen the restrictions and try again.

Eventually, you will find a configuration that allows the software to do the stuff it needs to do, and stops it from doing anything bad.

In the case of WordPress, you should try out all the different actions you would usually perform on your site:

- Make a blog post

- Edit a blog post

- Make a page

- Add and remove widgets

- Change themes

- Download a theme

- Download a plugin

- Activate and deactivate plugins

- Update WordPress

- Moderate comments

etc.

Of course, you may have a very customised WordPress installation, with lots of proprietary code. You may require special permissions that other sites can do without. In any case, you need to check and see if your policy is too restrictive.

AppArmor has a learning tool that uses the “complain” mechanism to generate a set of policies. It starts by assuming the most restrictive policy possible. You use the app, as mentioned above. While the app runs, AppArmor generates complaints about every system call, logging the request and privilege the process required.

When you have run the app through its paces, you tell AppArmor you’re done. It will then automatically generate a security profile, granting the process privileges for every action mentioned in the logs.

You can manually tweak this profile, tuning it for greater security and readability.
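The learning workflow above boils down to collecting the accesses a program made and turning them into allow rules. The following is a toy sketch of that idea in Python, not AppArmor’s actual tooling: the `observed` list and the `build_rules` helper are hypothetical, and a real profile is generated from the audit log by AppArmor’s own utilities.

```python
from collections import defaultdict


def build_rules(observed):
    """Merge (path, mode) observations into one rule line per path.

    `observed` is a hypothetical list of (path, mode) pairs, where mode is
    a single letter such as "r" (read) or "w" (write).
    """
    modes_by_path = defaultdict(set)
    for path, mode in observed:
        modes_by_path[path].add(mode)
    # Emit rules in a stable order. Each line is "path permissions,",
    # the general shape of a file rule in a path-based profile.
    return [
        f"{path} {''.join(sorted(modes))},"
        for path, modes in sorted(modes_by_path.items())
    ]


# Hypothetical accesses recorded while exercising the site.
observed = [
    ("/var/www/html/wp-config.php", "r"),
    ("/var/www/html/wp-content/uploads/photo.jpg", "w"),
    ("/var/www/html/wp-content/uploads/photo.jpg", "r"),
    ("/var/www/html/wp-config.php", "r"),
]
for rule in build_rules(observed):
    print(rule)
```

Notice that the generated rules only cover what was exercised during the run. Anything you forgot to try is missing, and anything you did once by accident is allowed, which is why the result deserves a manual review.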

AppArmor is relatively easy to set up, but it does require you to configure each application and program one by one. This puts the onus for security on the user or sysadmin. Often, when new apps are added, users forget to configure AppArmor.

Or they do a horrible job and lock themselves out, so their only option is to disable the profile.

That said, several distributions have adopted AppArmor. They ship profiles for many of the applications in their repositories, so the matching profile is installed automatically along with the application.

Generic profiles shipped by repo teams are designed to cover a wide range of different use cases, so they tend to be fairly loose. Your own needs are usually much narrower. In this case, it pays to fine-tune the settings, making them more restrictive.

GRSecurity

GRSecurity is a suite of security enhancements. Many of them are designed to make it harder for hackers to take control of running processes. These modules protect against malformed data, shellcode, memory overflow attacks, and exploits that deliberately execute code out of intended sequence.

The access control part of GRSecurity is similar to AppArmor, in that you create profiles for programs, describing what they can or can’t do. GRSecurity also has a learning mode. Unlike AppArmor, you don’t have to train GRSecurity with each app one at a time. Instead, you run your system as normal. After a period of time, GRSecurity will have gathered enough information to understand the required permissions for the entire system. It will create a security profile for you.

Just like AppArmor, it makes sense to examine these profiles and tweak them by hand.

GRSecurity is a commercial product, but there is an unofficial port, which is integrated into the Alpine Linux package. By “unofficial port”, I mean an independently developed project that does the same thing – not an illegal rip-off.

As GRSecurity and the port both draw on the same open source projects, they’re virtually identical in capabilities, stability, and reliability.

GRSecurity is also easier to use than AppArmor – and both are much simpler than SELinux.

Building a secure web server on top of Alpine Linux is a little involved. Since the distribution is less popular, there are fewer precompiled packages. You have to compile the software from source, and that can get quite complicated.

For this reason, we won’t attempt it in this article. In the future, this could become a viable option.

For now, we’ll use Ubuntu and AppArmor.

Application Level Access Control – Apache

Aha, you thought I’d finished talking about access control! Well, I almost have.

Everything I’ve covered so far has dealt with access control at the operating system level. This constitutes your last line of defense, and it’s incredibly important to enforce the rules at this level.

Apache is a user-facing service – it’s how your users interact with your website. It’s important to control this interaction too.

WordPress is made up of multiple files sitting in publicly visible folders. These folders contain code that WordPress requires to function. They include your site’s configuration, plugins and themes, and core WordPress files.

The web server must be able to access them because it has to load the code into the PHP runtime – otherwise, your site will break. So blocking Apache from accessing them is not an option.

But unauthorized members of the public should never be able to browse these directories or read the source code of the files in them.

When users navigate through your site in an innocent fashion, they click on links and see your content. But a hacker could attempt to view your WordPress folders directly. If they know your site’s address, they can simply type in a folder’s URL and browse through its contents.

This is default Apache behavior: it generates a directory index when a folder has no index.html or index.php file.

If Apache is misconfigured (for example, if it fails to hand PHP files to the PHP interpreter), these files can be served as plain text. All of your code will be visible for anyone to see, potentially including your database credentials, cryptographic keys, and salts. Keeping this data secret is vital. Otherwise, hacking your site becomes trivially easy.

You can configure Apache to refuse any requests for these essential directories using .htaccess files. These are folder-level configuration files that Apache reads before it replies to a request. You can modify many of Apache’s settings in .htaccess files, and these settings only apply to requests for content in that folder and its subfolders. It’s a cool feature that enables all kinds of interesting behavior.

The primary use for .htaccess files is to control access (the clue’s in the name!).

In a later section, I’ll show you exactly how to do that.

Application Level Access Control – WordPress

WordPress has its own access control features built into the core files, and you can extend them with plugins.

Before you can write a page or post, you have to log on to your admin panel. In the standard setup, this requires a username and password. Using a secure password is essential – avoid words or phrases that can be guessed easily.

You can also add a second layer of authentication – some plugins will send a special code to your phone as a text message, for instance. This means that simply having the password is not enough. An attacker would also have to steal or clone your phone.

That’s great, but there are some exploits that bypass the login process entirely. If an attacker knows your WordPress cryptographic salts, they can use fake cookies to trick WordPress into thinking they have logged on already. Without your password, they still have to use brute force to find a cookie that works, trying possible combinations at a rate of 30 attempts per second.
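To see why the salts matter so much, it helps to look at how a signed cookie works in general. The sketch below is a deliberately simplified illustration in Python and is not WordPress’s actual cookie format: the `sign` and `verify` helpers, the cookie layout, and `SERVER_SECRET` are all hypothetical. It only shows the principle that whoever holds the secret can produce a cookie the server will accept.

```python
import hashlib
import hmac


def sign(username: str, expires: int, secret: bytes) -> str:
    """Return 'username|expires|signature' for a toy login cookie."""
    payload = f"{username}|{expires}"
    sig = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}|{sig}"


def verify(cookie: str, secret: bytes) -> bool:
    """Recompute the signature and compare it in constant time."""
    payload, _, sig = cookie.rpartition("|")
    expected = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(sig, expected)


SERVER_SECRET = b"hypothetical-secret-salt"  # stands in for the site's salts

# An attacker who knows the secret can mint a cookie without logging in.
forged = sign("admin", 2000000000, SERVER_SECRET)
print(verify(forged, SERVER_SECRET))  # True

# Without the secret, a guessed signature is rejected.
guess = sign("admin", 2000000000, b"wrong-guess")
print(verify(guess, SERVER_SECRET))  # False
```

The server never sees a password in this exchange, so nothing resembling a failed login is recorded when a forged cookie is rejected.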

As these attempts do not generate error messages, it’s hard to track them. Of course, you’ll notice the increased network traffic. And you can configure your firewall to block any single machine that makes so many simultaneous connections. But a good hacker knows how to cover their tracks (using a botnet, for instance).

Applying the concept of defense in depth, you can limit the damage a hacker can cause if they do manage to log on. You can prevent them from installing plugins or themes (containing malicious code) by changing some settings inside your wp-config.php file. You can also use AppArmor to block the Apache process from changing the code on disk.

The downside is that you’ll have to install plugins or change themes manually – but that’s a small price to pay. I’ll show you how you can make the process painless in a later section.

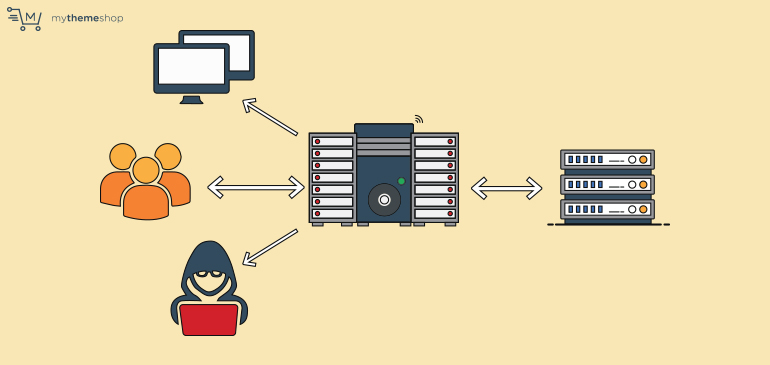

7 Network Based Attacks

So far, we’ve covered how attackers harm computer systems through running processes. So how do attackers interact with these processes?

Data enters the system through hardware devices. Keyboards and mice are input devices – and so is the network card.

If the hacker has physical access to the computer, they have many options at their disposal. They can type commands through the keyboard, or insert a disk or USB stick into the machine and launch an attack that way.

When it comes to network-based attacks, attackers have to reach through one of the machine’s network ports. Ports are a routing mechanism that computers use to ensure messages reach the right process on a computer.

A typical computer system has dozens of processes running at any one time, and many of them will communicate over the network. Often, these processes expect a reply. Other processes wait patiently until someone connects and requests data.

IP addresses allow the network infrastructure to deliver messages to the correct computer. But how do these messages make their way to the right process? They do this through a port number.

Inside the computer, the incoming data packets are sent to processes that are “listening” to these ports. If no process is registered, the data packet is deleted.

Imagine the computer is a massive apartment building, with thousands of units inside. Some of them are occupied, and others are empty. They all share the same street address (IP address). But each unit has its own number (port number).

When a process starts up, it can request a port number from the kernel. If another process is already listening on that port, the kernel will signal an error.

If the port’s free, the kernel assigns it. Some ports are privileged (on Linux, those below 1024), and the process will not be granted access unless it’s running as a superuser, or with elevated privileges.
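You can watch the kernel enforce the “one listener per port” rule with a few lines of Python. This is a minimal sketch using the standard `socket` module. It binds to the loopback address only, and it asks for port 0, which tells the kernel to pick any free port.

```python
import errno
import socket

# Ask the kernel for any free port on the loopback interface.
first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
first.bind(("127.0.0.1", 0))
first.listen()
port = first.getsockname()[1]

# A second socket asking for the same port is refused by the kernel.
second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
try:
    second.bind(("127.0.0.1", port))
    conflict = False
except OSError as exc:
    conflict = exc.errno == errno.EADDRINUSE
finally:
    second.close()
    first.close()

print("port already in use:", conflict)
```

The second `bind` call fails with an “address already in use” error, which is the error signal described above.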

Client processes send requests to server processes. They can ask for data or send it – the server is supposed to do something with the data that it receives.

Clients can use any random port, but servers have to listen on a specific port. Otherwise, nobody would know how to route a message to them!

The kernel doesn’t know or care if a process is a client or a server. It just handles the routing. It delivers the data and lets the processes deal with it in their own way.

When a packet arrives on a computer, it carries a destination port number. If there’s a process listening on that port, the kernel notifies the process and delivers the data.

If we go back to the apartment building example, it’s like the porter calling the apartment and telling them to pick up a parcel.

If there’s no process listening, the kernel simply deletes the data.

For an attacker to exploit a system, they have to communicate with a process that’s listening on a port. Otherwise, they’d simply be sending messages that are ignored.

This is why you should only run processes that you need for your site to run. Anything else is a security risk. It forms a part of your attack surface.

There are literally thousands of network-aware programs that could be running on a computer, and there’s no way to know in advance which ones are there. What’s more, many of them will be listening on an unexpected port address – ports can be assigned randomly.

So hackers often employ a tool called a port scanner. This tool systematically sends messages to a range of ports on potential target machines. It can be set to check popular ports or to scan every port.

When the target machine accepts a connection on a port, the scanner knows that port is occupied. After a short time, the hacker has a complete picture of the machine’s network attack surface. This allows them to plan and execute their attack.
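A basic scanner is surprisingly little code, which is part of why scans are so common. Here is a minimal sketch of a TCP “connect” scan in Python. The `scan` helper is a hypothetical name, and the demo only probes a listener that the script itself opens on the loopback address. Only ever scan machines you own or have permission to test.

```python
import socket


def scan(host: str, ports, timeout: float = 0.5):
    """Return the ports on `host` that accept a TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an error.
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports


# Demo against our own machine: open a listener, then scan for it.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))  # port 0 lets the kernel pick a free port
listener.listen()
demo_port = listener.getsockname()[1]

found = scan("127.0.0.1", [demo_port])
listener.close()
print(demo_port in found)
```

Running the same check against your own server from the outside is a quick way to see what an attacker would see.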

Often, ports are occupied by processes that provide no real value to the machine’s legitimate users. This tends to happen when you install a large distribution designed for multiple uses.

Large distros include software that is useless to you in terms of running a website. So the best strategy is to start with a very lightweight distro and add the components you need.

Some of these processes can be exploited. So it’s vital to identify them and shut them down.

You can get a list of the processes listening on your machine’s ports with this command (run it as root to see the process name for every socket):

netstat -tulpn

If you see any unnecessary processes, you can shut them down manually. Better yet, if the process is completely unnecessary, you can remove it from your system.
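If netstat isn’t installed, Linux exposes the same information about TCP sockets in the `/proc/net/tcp` file. The sketch below shows one way to pull the listening ports out of that format in Python. The `listening_ports` helper is a hypothetical name, and the sample text is made up for illustration rather than taken from a real machine. Unlike `netstat -p`, it doesn’t tell you which process owns each port, and it only covers IPv4.

```python
def listening_ports(proc_net_tcp: str):
    """Return sorted TCP ports in the LISTEN state from /proc/net/tcp text."""
    LISTEN = "0A"  # the kernel's hex code for the TCP LISTEN state
    ports = set()
    for line in proc_net_tcp.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 4:
            continue
        local_address, state = fields[1], fields[3]
        if state == LISTEN:
            # The address is "HEXIP:HEXPORT"; the port is plain hexadecimal.
            ports.add(int(local_address.rsplit(":", 1)[1], 16))
    return sorted(ports)


# Illustrative sample in the /proc/net/tcp layout (not from a real machine).
sample = """\
  sl  local_address rem_address   st tx_queue rx_queue
   0: 00000000:0050 00000000:0000 0A 00000000:00000000
   1: 0100007F:0CEA 00000000:0000 0A 00000000:00000000
   2: 0100007F:0050 0100007F:D2F4 01 00000000:00000000
"""
print(listening_ports(sample))  # [80, 3306]
```

On a real server, you would pass in the contents of the file itself, for example `listening_ports(open("/proc/net/tcp").read())`, and compare the result against the ports you expect to be open.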

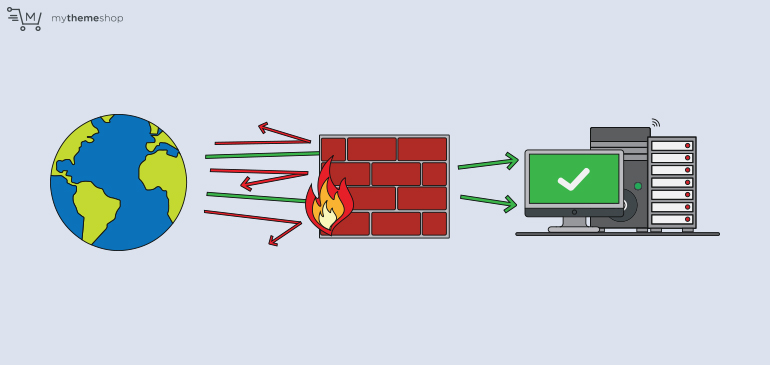

8 Firewalls

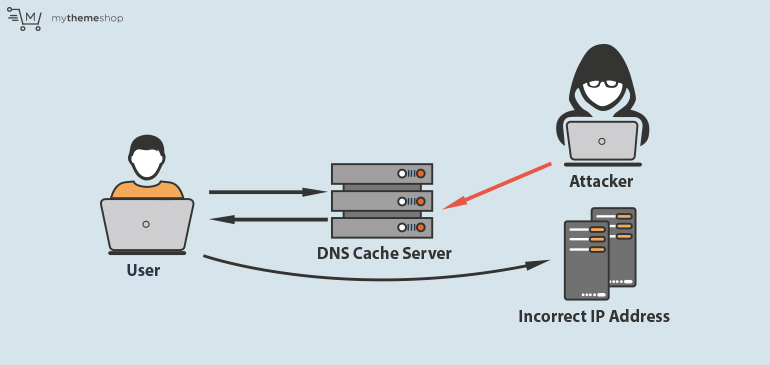

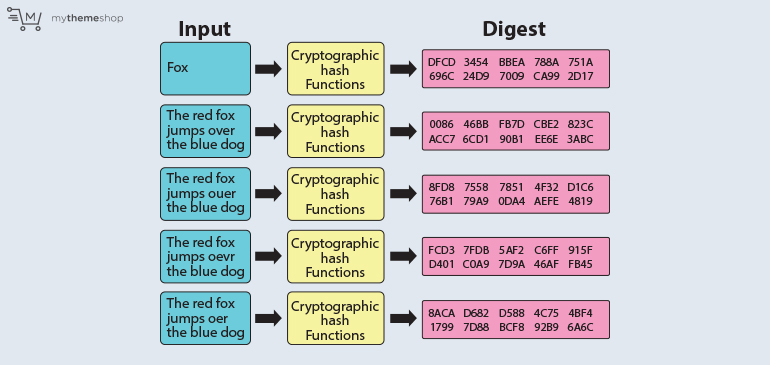

It’s easy to overlook a stray software package and end up with an exposed port. It’s also easy to misconfigure a networked app, leaving it open to attack.